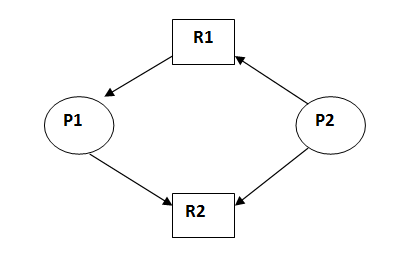

A calm edge denotes that a request may be made in future and is represented as a dashed line. An edge from a process to resource is a request edge and an edge from a resource to process is an allocation edge. The resource allocation graph has request edges and assignment edges. Vertices of the resource allocation graph are resources and processes. If there is only one instance of every resource, then a cycle implies a deadlock. If there are cycles, there may be a deadlock. If there are no cycles in the resource allocation graph, then there are no deadlocks. Since resource allocation is not done right away in some cases, deadlock avoidance algorithms also suffer from low resource utilization problem.Ī resource allocation graph is generally used to avoid deadlocks. Deadlock avoidance algorithms try not to allocate resources to a process if it will make the system in an unsafe state. A safe sequence of processes and allocation of resources ensures a safe state.

A system can be considered to be in safe state if it is not in a state of deadlock and can allocate resources upto the maximum available. Deadlocks cannot be avoided in an unsafe state. If a system is already in a safe state, we can try to stay away from an unsafe state and avoid deadlock. Based on all these info we may decide if a process should wait for a resource or not, and thus avoid chances for circular wait. Most deadlock avoidance algorithms need every process to tell in advance the maximum number of resources of each type that it may need. Instead, we can try to avoid deadlocks by making use prior knowledge about the usage of resources by processes including resources available, resources allocated, future requests and future releases by processes. The algorithm may itself increase complexity and may also lead to poor resource utilization.Īs you saw already, most prevention algorithms have poor resource utilization, and hence result in reduced throughputs. To avoid circular wait, resources may be ordered and we can ensure that each process can request resources only in an increasing order of these numbers. The challenge here is that the resources can be preempted only if we can save the current state can be saved and processes could be restarted later from the saved state. In the second approach, if a process request for a resource which are not readily available, all other resources that it holds are preempted. If a process request for a resource which is held by another waiting resource, then the resource may be preempted from the other waiting resource. This may result in a starvation as all required resources might not be available freely always. Second approach is to request for a resource only when it is not holing any other resource. They could have been used by other processes during this time. This will avoid deadlock, but will result in reduced throughputs as resources are held by processes even when they are not needed.

We will see two approaches, but both have their disadvantages.Ī resource can get all required resources before it start execution. Not always possible to prevent deadlock by preventing mutual exclusion (making all resources shareable) as certain resources are cannot be shared safely. However most prevention algorithms have poor resource utilization, and hence result in reduced throughputs.

We can try to prevent or avoid deadlock, and if that doesn’t work out, we should detect deadlock and try to recover from deadlock.ĭeadlock prevention algorithms ensure that at least one of the necessary conditions (Mutual exclusion, hold and wait, no preemption and circular wait) does not hold true. You have already seen what deadlock is and the necessary conditions for a deadlock to happen.
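When every resource has a single instance, checking a resource allocation graph for deadlock comes down to finding a cycle. Here is a minimal Python sketch. It assumes the graph is stored as a dictionary that maps each vertex to the vertices its edges point to. The vertex names (`P1`, `R1`, ...) and the function `has_cycle` are hypothetical and exist only for illustration. The search is depth-first: it marks the vertices on the current path and reports a cycle when it reaches one of them again.

```python
from typing import Dict, List


def has_cycle(graph: Dict[str, List[str]]) -> bool:
    """Return True if the directed graph contains a cycle."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited / on current DFS path / finished
    colour = {node: WHITE for node in graph}
    for targets in graph.values():  # also track vertices that only appear as targets
        for t in targets:
            colour.setdefault(t, WHITE)

    def visit(node: str) -> bool:
        colour[node] = GREY
        for nxt in graph.get(node, []):
            if colour[nxt] == GREY:  # back edge: we reached a vertex on the path
                return True
            if colour[nxt] == WHITE and visit(nxt):
                return True
        colour[node] = BLACK
        return False

    return any(colour[n] == WHITE and visit(n) for n in list(colour))


# P1 holds R1 and requests R2, while P2 holds R2 and requests R1.
deadlocked = {"P1": ["R2"], "R2": ["P2"], "P2": ["R1"], "R1": ["P1"]}
# Same situation, but P2 is no longer requesting R1.
no_deadlock = {"P1": ["R2"], "R2": ["P2"], "R1": ["P1"]}

print(has_cycle(deadlocked))   # True
print(has_cycle(no_deadlock))  # False
```

A cycle proves a deadlock only in this single-instance case. With several instances per resource type, a cycle just signals that a deadlock is possible.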
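The safe-state test described earlier can be sketched with the safety check of the Banker's algorithm. This is a classic avoidance algorithm that the text does not name, so treat it as one possible illustration. Each process declares its maximum demand (`maximum`), `allocation` holds what it currently has, and `available` holds the free units of each resource type. All names and numbers here are made-up example data. The check looks for a process whose remaining need fits in the free resources, lets it finish, and reclaims its allocation. If every process can finish this way, the order in which they finished is a safe sequence.

```python
from typing import List, Optional


def find_safe_sequence(available: List[int],
                       maximum: List[List[int]],
                       allocation: List[List[int]]) -> Optional[List[int]]:
    """Return a safe sequence of process indices, or None if the state is unsafe."""
    n = len(maximum)
    # need[i][j]: units of resource type j that process i may still request
    need = [[m - a for m, a in zip(maximum[i], allocation[i])] for i in range(n)]
    work = list(available)  # units currently free, per resource type
    finished = [False] * n
    sequence: List[int] = []

    progress = True
    while progress:
        progress = False
        for i in range(n):
            if not finished[i] and all(nd <= w for nd, w in zip(need[i], work)):
                # Process i can run to completion and then releases what it holds.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                sequence.append(i)
                progress = True

    return sequence if all(finished) else None


# Example data: 5 processes, 3 resource types.
MAXIMUM = [[7, 5, 3], [3, 2, 2], [9, 0, 2], [2, 2, 2], [4, 3, 3]]
ALLOCATION = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]

print(find_safe_sequence([3, 3, 2], MAXIMUM, ALLOCATION))  # [1, 3, 4, 0, 2]
print(find_safe_sequence([1, 0, 0], MAXIMUM, ALLOCATION))  # None (unsafe)
```

An avoidance algorithm built on this check would grant a request only if the state after granting it still has a safe sequence. Otherwise the requesting process waits.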
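Returning to prevention, the resource-ordering rule for circular wait can be sketched with Python's `threading.Lock`. The `RankedLock` wrapper and the helpers `acquire_all` and `release_all` are hypothetical names for this example. Every lock gets a fixed rank, and locks are always taken in increasing rank. Two threads that list the same pair of locks in opposite orders therefore still acquire them in the same order, so they cannot end up waiting on each other in a cycle.

```python
import threading
from typing import List


class RankedLock:
    """A lock tagged with a fixed rank; locks must be taken in increasing rank."""

    def __init__(self, rank: int) -> None:
        self.rank = rank
        self._lock = threading.Lock()

    def acquire(self) -> None:
        self._lock.acquire()

    def release(self) -> None:
        self._lock.release()


def acquire_all(locks: List[RankedLock]) -> List[RankedLock]:
    """Take the locks in increasing rank, whatever order the caller listed them in."""
    ordered = sorted(locks, key=lambda lk: lk.rank)
    for lk in ordered:
        lk.acquire()
    return ordered


def release_all(ordered: List[RankedLock]) -> None:
    """Release in reverse acquisition order."""
    for lk in reversed(ordered):
        lk.release()


r1, r2 = RankedLock(1), RankedLock(2)
counter = {"n": 0}


def worker(first: RankedLock, second: RankedLock) -> None:
    for _ in range(10_000):
        held = acquire_all([first, second])  # always r1 then r2
        counter["n"] += 1                    # protected by both locks
        release_all(held)


# The two threads list the locks in opposite orders; ordering prevents a cycle.
t1 = threading.Thread(target=worker, args=(r1, r2))
t2 = threading.Thread(target=worker, args=(r2, r1))
t1.start()
t2.start()
t1.join(timeout=10)
t2.join(timeout=10)
print(t1.is_alive() or t2.is_alive(), counter["n"])  # False 20000
```

Without the sort in `acquire_all`, the same two workers could each grab their first lock and block forever on the second.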
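The first hold-and-wait approach (get everything before starting) can be sketched with non-blocking acquires. If any lock is busy, the caller hands back the locks it already took instead of holding them while it waits, which is also the spirit of the second no-preemption approach. The helpers `acquire_all_or_none` and `run_with_all` and the retry delay are assumptions for illustration, not a standard API. As the text warns, a caller that keeps losing the race can still starve.

```python
from __future__ import annotations

import threading
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def acquire_all_or_none(locks: List[threading.Lock]) -> bool:
    """Try to take every lock without blocking; on any failure, release what was taken."""
    taken: List[threading.Lock] = []
    for lk in locks:
        if lk.acquire(blocking=False):
            taken.append(lk)
        else:
            for held in reversed(taken):  # hand back everything instead of waiting
                held.release()
            return False
    return True


def run_with_all(locks: List[threading.Lock], task: Callable[[], T],
                 retry_delay: float = 0.001) -> T:
    """Retry until all locks are free together, run task, then release them."""
    while not acquire_all_or_none(locks):
        time.sleep(retry_delay)  # back off; a process can still starve here
    try:
        return task()
    finally:
        for lk in reversed(locks):
            lk.release()


a, b = threading.Lock(), threading.Lock()
b.acquire()                                  # pretend another process holds b
print(acquire_all_or_none([a, b]))           # False
print(a.locked())                            # False: a was not kept while waiting
b.release()
print(run_with_all([a, b], lambda: "done"))  # done
print(a.locked(), b.locked())                # False False
```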
